If your org is anything like mine, it's constantly looking for efficiencies, particularly improvements to the Sales Funnel and Sales Tasks.

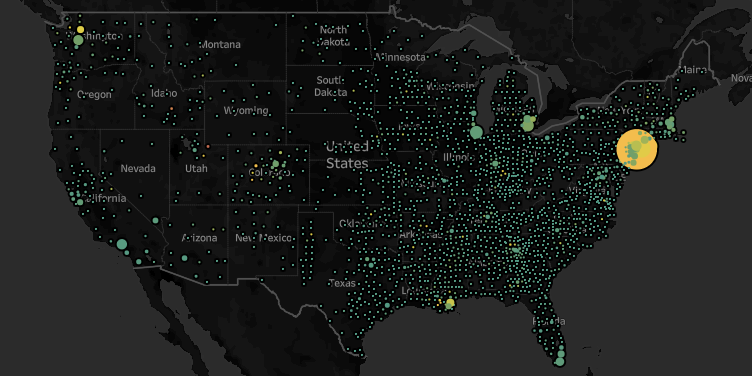

A few years back, my team, a sales-oriented Business Intelligence team, was tasked with building out a series of embedded dashboards within our SFDC instance. The goal was simple: push data to the Sales Reps and Account Managers within the platform they already work in. If someone on the sales team has an account page open, they should see that account's metrics. This keeps everyone on the same page, in the same tool, and focused on Sales Tasks, rather than herding and filtering reports and reconciling and chasing down data week after week.

The Plan™

To deliver on our goal, we brought together a significant number of resources.

As Dashboard Developers, we wanted to standardize our look and feel to match SFDC's environment.

We also participated in discussions to gather business requirements from our Sales Enablement team and the Sales Staff. The former took on the project management role and did a great job of facilitating our meetings with both sales executives and our sales teams.

Key to these discussions was refining and standardizing KPIs across regional sales teams and product-owner silos. Based on these meetings, we ultimately included a few "have-your-cake-and-eat-it-too" considerations, using parameters to let users choose between gross and net, or between different filters (such as including or excluding consumer, flash, or both).
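To make the gross/net and include/exclude idea concrete, here is a minimal Python sketch of what those parameters do. It is not Tableau code: in Tableau this would typically be a string or boolean parameter referenced from a calculated field, whereas below the "parameters" are plain function arguments. The segment labels, field names, and sample rows are made up for illustration.

```python
from dataclasses import dataclass
from typing import Iterable

@dataclass
class SalesRow:
    account_id: str
    segment: str   # hypothetical segment label, e.g. "consumer", "flash", "enterprise"
    gross: float
    net: float

def measure_value(row: SalesRow, measure_param: str) -> float:
    """Mimics a parameter-driven calc: return gross or net depending on the parameter."""
    if measure_param == "Gross":
        return row.gross
    if measure_param == "Net":
        return row.net
    raise ValueError(f"unknown measure parameter: {measure_param!r}")

def include_row(row: SalesRow, include_consumer: bool, include_flash: bool) -> bool:
    """Mimics a parameter-driven filter: optionally drop consumer and/or flash rows."""
    if row.segment == "consumer" and not include_consumer:
        return False
    if row.segment == "flash" and not include_flash:
        return False
    return True

def total(rows: Iterable[SalesRow], measure_param: str,
          include_consumer: bool = True, include_flash: bool = True) -> float:
    return sum(measure_value(r, measure_param) for r in rows
               if include_row(r, include_consumer, include_flash))

rows = [
    SalesRow("A1", "consumer", 100.0, 90.0),
    SalesRow("A1", "flash", 50.0, 40.0),
    SalesRow("A2", "enterprise", 200.0, 180.0),
]
print(total(rows, "Gross"))                     # 350.0
print(total(rows, "Net", include_flash=False))  # 270.0
```

One data source serves every audience; each user just flips the parameters instead of the team maintaining separate gross and net versions of the dashboard.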

Fortunately for us, a significant share of our dashboards were based on an existing dashboard or report, so the effort focused primarily on adding a few dimensions to various data sets, standardizing data sets wherever possible, and applying a standard look and feel.

Row Level Security Explained

We also made sure to add row-level security, which is a pretty cool feature. I wish I could take credit for it, but it was a collaboration between IT and Tableau, driven principally by my boss and mentor, Mike Mixon.

As mentioned briefly, part of our requirements was row-level security at the Account Rep level, plus Regional and Global access based on groups in our LDAP directory. This was done using a boolean IIF statement. That true/false calculation is what gets applied as a data source filter on the published data source on Tableau Server.

I abbreviated the logic for readability:

[User Check]

IIF(

(ISMEMBEROF("Global Tableau User"))

or

(ISMEMBEROF("AMER Tableau User") AND [Region] = "AMER")

...

(ISMEMBEROF("JAPAN Tableau User") AND [Region] = "JAPAN")

or

(CONTAINS([ID Match],[User Logged In])),1,0) = 1
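To sanity-check logic like this outside Tableau, the filter can be mirrored in a few lines of Python. This is a sketch, not how Tableau evaluates it: the `User` class, the `REGION_GROUPS` mapping, and the sample accounts are hypothetical stand-ins for `ISMEMBEROF()`, the LDAP groups, and the published data source. The associated IDs are modeled as a Python list, so the rep check here is an exact membership test rather than a string search.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Group names follow the calc above; the region-to-group mapping is a hypothetical
# stand-in for however your LDAP groups line up with the [Region] field.
GLOBAL_GROUP = "Global Tableau User"
REGION_GROUPS = {
    "AMER": "AMER Tableau User",
    "JAPAN": "JAPAN Tableau User",
}

@dataclass
class User:
    username: str                                   # what USERNAME() would return
    groups: Set[str] = field(default_factory=set)   # what ISMEMBEROF() would check

def user_check(user: User, region: str, associated_ids: List[str]) -> bool:
    """True if the row should survive the data source filter."""
    if GLOBAL_GROUP in user.groups:                 # global access
        return True
    group = REGION_GROUPS.get(region)
    if group is not None and group in user.groups:  # regional group AND matching [Region]
        return True
    return user.username in associated_ids          # listed on the account itself

accounts = [
    {"account": "ACME", "region": "AMER", "ids": ["jdoe", "rlee"]},
    {"account": "Globex", "region": "JAPAN", "ids": ["tsato"]},
]

def visible_accounts(user: User) -> List[str]:
    return [a["account"] for a in accounts if user_check(user, a["region"], a["ids"])]

print(visible_accounts(User("jdoe")))                             # ['ACME']
print(visible_accounts(User("amer_mgr", {"AMER Tableau User"})))  # ['ACME']
print(visible_accounts(User("exec", {"Global Tableau User"})))    # ['ACME', 'Globex']
```

Because the branches are OR-ed together, a user only needs to satisfy one of them, and a regional group grants nothing outside its own region.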

At the end, you'll notice there are a couple of additional calculations that need explaining.

[User Logged In] is simply:

USERNAME()

[ID Match]:

// FIND returns the position of [User Logged In] within [Associated IDs], a long list of IDs (0 if it isn't there)

// MID extracts text from [Associated IDs] starting at that position

// LEN([User Logged In]) tells MID how many characters to extract, so the result is the full user ID

IF FIND([Associated IDs],[User Logged In]) > 0 THEN

MID([Associated IDs],

FIND([Associated IDs],[User Logged In]),

LEN([User Logged In]))

ELSE ""

END
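One thing worth testing with this pattern: FIND does a plain substring search, so a user ID that is a prefix of another ID in the list (say `jsmith` inside `jsmith2`) still produces a match. The Python below mirrors the calc (allowing for Python's 0-based indexing) and contrasts it with a delimiter-aware check. That check is a swapped-in technique and assumes [Associated IDs] is comma-separated with no spaces, which the post doesn't specify; the same idea could be written in Tableau by wrapping both strings in the delimiter before calling CONTAINS, something like `CONTAINS("," + [Associated IDs] + ",", "," + [User Logged In] + ",")`.

```python
def id_match(associated_ids: str, user: str) -> str:
    """Mirror of [ID Match]. Python's str.find is 0-based and returns -1 when
    the text is absent; Tableau's FIND is 1-based and returns 0."""
    pos = associated_ids.find(user)
    if pos >= 0:
        return associated_ids[pos:pos + len(user)]  # MID(..., start, LEN(user))
    return ""

def id_match_delimited(associated_ids: str, user: str, sep: str = ",") -> str:
    """Delimiter-aware variant: only a whole ID between separators counts."""
    if f"{sep}{user}{sep}" in f"{sep}{associated_ids}{sep}":
        return user
    return ""

ids = "jsmith2,akumar,mlopez"
print(repr(id_match(ids, "jsmith")))            # 'jsmith'  (false positive via jsmith2)
print(repr(id_match_delimited(ids, "jsmith")))  # ''
print(repr(id_match_delimited(ids, "mlopez")))  # 'mlopez'
```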

You might ask, "How did we get a field of Associated IDs?" For that, we pulled data from SFDC, with some help from IT, to determine each Account Owner. We then traversed up the sales hierarchy from each Account Owner to their manager, then that manager's manager, and so on up the chain of command.
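The hierarchy walk can be sketched as a simple loop over an employee-to-manager lookup. This is a hypothetical Python version, not the query IT actually ran: `manager_of` and `account_owner` stand in for the SFDC user and account tables, the IDs are made up, and the `seen` set is a defensive guard against a circular reporting line.

```python
from typing import Dict, List, Optional, Tuple

def chain_of_command(owner: str, manager_of: Dict[str, Optional[str]]) -> List[str]:
    """Return the owner followed by each manager above them, stopping at the top (or on a cycle)."""
    chain: List[str] = []
    seen = set()
    current: Optional[str] = owner
    while current is not None and current not in seen:
        chain.append(current)
        seen.add(current)
        current = manager_of.get(current)  # None once we pass the top of the hierarchy
    return chain

def visibility_rows(account_owner: Dict[str, str],
                    manager_of: Dict[str, Optional[str]]) -> List[Tuple[str, str]]:
    """Long, narrow output: one (account_id, person_id) row per person allowed to see the account."""
    return [(account, person)
            for account, owner in account_owner.items()
            for person in chain_of_command(owner, manager_of)]

manager_of = {"jdoe": "rlee", "rlee": "vp_amer", "vp_amer": None,
              "tsato": "kito", "kito": None}
account_owner = {"ACME": "jdoe", "Globex": "tsato"}
print(visibility_rows(account_owner, manager_of))
# [('ACME', 'jdoe'), ('ACME', 'rlee'), ('ACME', 'vp_amer'), ('Globex', 'tsato'), ('Globex', 'kito')]
```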

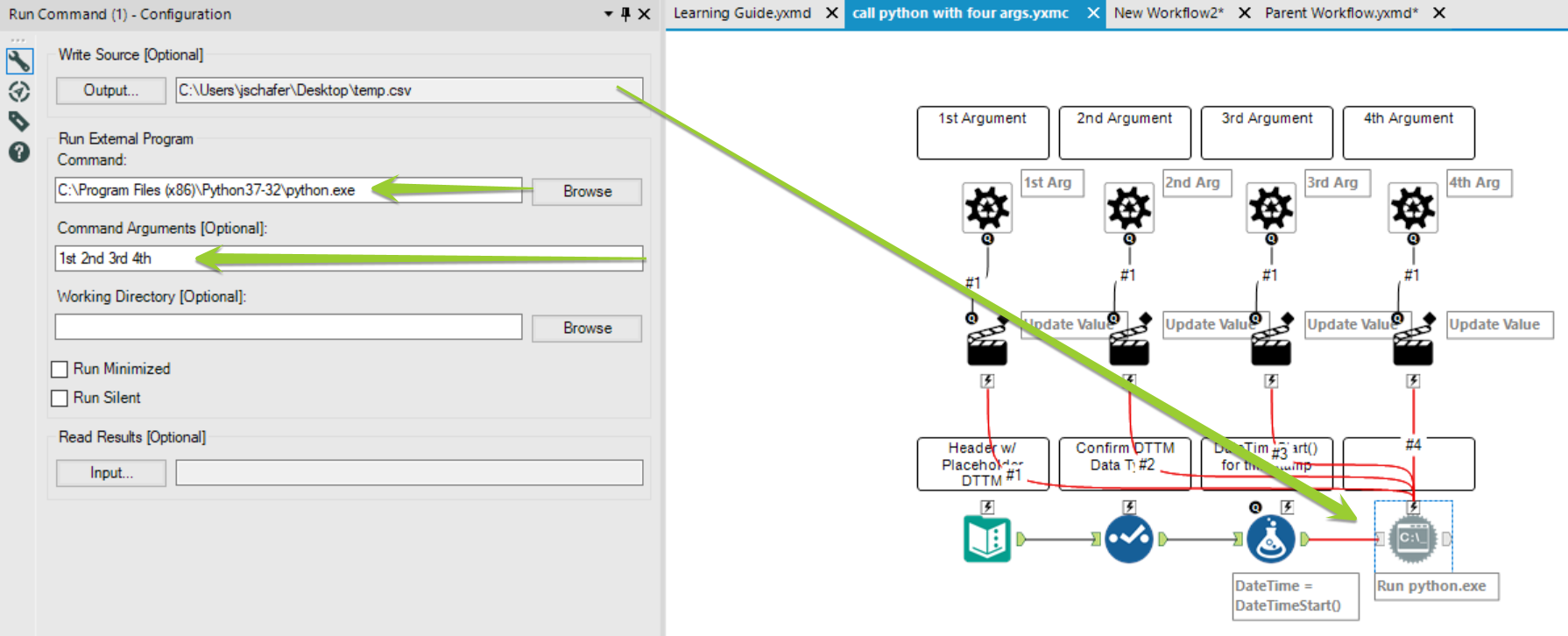

Starting from a long, narrow list with one row for each combination of Account ID and Personal ID with authority to see that account, we then did a relatively simple transform in Alteryx to concatenate every ID with visibility rights into a given account into a single field. That field was then joined at the Account ID level to the data set as part of the data preparation for each pertinent data source.
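The Alteryx step amounts to a group-by with string concatenation, followed by a join. A rough Python equivalent, assuming a comma delimiter; `Account ID` and `Associated IDs` follow the post, while `Bookings` and the sample rows are hypothetical:

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

def concat_ids(rows: Iterable[Tuple[str, str]], sep: str = ",") -> Dict[str, str]:
    """Collapse (account_id, person_id) rows into one delimited string per account."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for account, person in rows:
        if person not in grouped[account]:  # skip duplicate person rows
            grouped[account].append(person)
    return {account: sep.join(people) for account, people in grouped.items()}

def join_associated_ids(facts: List[dict], associated: Dict[str, str]) -> List[dict]:
    """Left-join the concatenated field onto each fact row at the Account ID level."""
    return [{**row, "Associated IDs": associated.get(row["Account ID"], "")} for row in facts]

long_rows = [("ACME", "jdoe"), ("ACME", "rlee"), ("ACME", "vp_amer"), ("Globex", "tsato")]
associated = concat_ids(long_rows)
facts = [{"Account ID": "ACME", "Bookings": 1200.0},
         {"Account ID": "Globex", "Bookings": 300.0}]
joined = join_associated_ids(facts, associated)
print(joined[0]["Associated IDs"])  # jdoe,rlee,vp_amer
```

Storing one delimited string per account keeps the data source at its original grain, which is what lets a single row-level calc like [ID Match] do the filtering.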

Still to Document:

Actions

(How to filter, and how to use URL actions to go out to Salesforce to create tasks, open opportunities, etc.) I have already talked a bit about how to make a URL action work in a previous blog post. Basically, the only difference is that instead of passing <stock> combined with a Google Finance URL, you'd pass an opportunity record's ID combined with a Salesforce URL.
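Here is a small sketch of the opportunity link such a URL action would produce. In Tableau the URL action itself would just contain the field reference in angle brackets, something like `<Opportunity ID>` where the stock example had `<stock>`; the Python below only shows the resulting string. The base URL and the `Opportunity ID` field name are placeholders. The Lightning path `/lightning/r/Opportunity/<id>/view` is one common record URL format, while a Classic org opens a record with the ID directly after the host.

```python
from urllib.parse import quote

# Hypothetical instance URL; replace with your own org's domain.
SFDC_BASE = "https://yourcompany.my.salesforce.com"

def opportunity_url(opportunity_id: str, lightning: bool = True) -> str:
    """Build a link that opens an Opportunity record, as a Tableau URL action would."""
    rid = quote(opportunity_id, safe="")  # IDs are alphanumeric, but encode defensively
    if lightning:
        return f"{SFDC_BASE}/lightning/r/Opportunity/{rid}/view"
    return f"{SFDC_BASE}/{rid}"  # Classic-style: record ID right after the host

print(opportunity_url("006XXXXXXXXXXXXXXX"))
```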

Data Governance

Matching SFDC to the EDW: making sure that account ownerships, for example, are correct and up to date. We have guard rails in place; for example, accounts owned by inactive users are flagged and sent to Sales Managers.
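A guard rail like that can be as simple as a join from accounts to owners and a check on the owner's active flag. This Python sketch assumes a user table with an active flag and a manager ID (all names and sample data hypothetical) and groups the flagged accounts by the manager who should reassign them; owners missing from the user table fall into an `UNASSIGNED` bucket.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class SalesUser:
    user_id: str
    is_active: bool
    manager_id: Optional[str]

def inactive_owner_report(account_owner: Dict[str, str],
                          users: Dict[str, SalesUser]) -> Dict[str, List[str]]:
    """Group accounts whose owner is inactive (or unknown) by the manager who should reassign them."""
    report: Dict[str, List[str]] = defaultdict(list)
    for account, owner_id in account_owner.items():
        owner = users.get(owner_id)
        if owner is None:
            report["UNASSIGNED"].append(account)  # owner missing from the user table
        elif not owner.is_active:
            report[owner.manager_id or "UNASSIGNED"].append(account)
    return dict(report)

users = {
    "jdoe": SalesUser("jdoe", True, "rlee"),
    "bwong": SalesUser("bwong", False, "rlee"),
    "rlee": SalesUser("rlee", True, None),
}
account_owner = {"ACME": "jdoe", "Initech": "bwong", "Umbrella": "gone_user"}
print(inactive_owner_report(account_owner, users))
# {'rlee': ['Initech'], 'UNASSIGNED': ['Umbrella']}
```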

Caveats:

A little shop talk: so far, we're an exclusively on-prem Tableau shop. I have heard that Tableau Online makes this a bit easier with regard to authentication, though authentication itself isn't my team's purview.

For Tableau on-prem shops, there's a tool called Sparkler that passes authentication from Salesforce to an on-prem Tableau Server. For us, it hasn't been a flawless experience as authentication technology evolves, so if you're on-prem, suffice it to say that you'll need to set aside some resources for your authentication folks to keep the authentication process seamless.

In our experience, this isn't a one-time IT investment but an integration capability that needs to be kept up to date as authentication standards and Tableau versions evolve. I'm not certain what a cloud-to-cloud integration looks like, so your mileage may vary if that's the sort of landscape you're in.

Our mandate includes phone, tablet, and desktop support from within Salesforce. By far the trickiest to implement is Tableau on a phone via the Salesforce app. There seem to be limitations to what Salesforce Canvas (think "browser") is capable of. We still have a few bugs there, mostly around actions that navigate from a dashboard to an SFDC record (via a URL action in Tableau) and then back to the dashboard. These bugs seem to be more common on iPhone than on Android. If you perform the same function from within the Tableau Mobile app, there's no issue, so our suspicion falls on Salesforce Canvas.

Going from the Dashboard to a particular opportunity via a URL Action is wicked cool. Pre-populating a task form, based on the dashboard data is similarly awesome and pretty straightforward, once you acquaint yourself with the proper fields and how they map to your Tableau data.
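For the task form, one option in Lightning Experience is the `defaultFieldValues` query parameter on a new-record URL. Below is a minimal sketch of building that link, assuming a Lightning org; the base URL, subject, and record ID are placeholders, and Classic orgs use a different mechanism, so check the field mapping against your own instance. `Subject` and `WhatId` are standard Task fields (`WhatId` links the task to a record such as an opportunity). Values are percent-encoded so commas or spaces coming from the dashboard data don't break the comma-separated list.

```python
from urllib.parse import quote
from typing import Dict

# Hypothetical instance URL; replace with your own org's domain.
SFDC_BASE = "https://yourcompany.my.salesforce.com"

def new_task_url(fields: Dict[str, str]) -> str:
    """Lightning 'new Task' URL with default field values (Field=Value pairs, comma-separated)."""
    pairs = ",".join(f"{name}={quote(str(value), safe='')}" for name, value in fields.items())
    return f"{SFDC_BASE}/lightning/o/Task/new?defaultFieldValues={pairs}"

print(new_task_url({"Subject": "Follow up on renewal", "WhatId": "006XXXXXXXXXXXXXXX"}))
```

In a Tableau URL action, the literal values above would be replaced by field references pulled from the mark the user clicked.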